Overconfident Algorithms: When AI Thinks Like a Human (and That’s Not Always Good)

The Human Mind Inside the Machine — How and Why AI Mimics Human Bias

We like to imagine artificial intelligence as the ultimate rational agent — immune to the emotional impulses, logical missteps, and subconscious biases that often cloud human judgment. But what if the machines we built to think clearly are, in fact, mirroring the worst of our decision-making flaws?

That unsettling possibility is no longer just philosophical speculation. A recent study published in the journal Manufacturing & Service Operations Management put two of OpenAI’s most advanced language models — GPT-3.5 and GPT-4 — to the test across 18 well-established human cognitive biases. These include patterns of irrational behavior like overconfidence, risk aversion, the sunk cost fallacy, and the hot-hand bias — all classic mental traps that psychologists have documented in human behavior for decades.

The results were eye-opening. Despite being designed to model language, not psychology, the models replicated human-like biases in nearly half of the scenarios tested. Even more intriguing, they did so whether the prompts were purely abstract or tied to real-world business problems like inventory management and supplier negotiations. This suggests something deeper than surface pattern-matching: it hints at a structural issue in how these AIs “reason.”

To understand why, we need to look at how large language models (LLMs) are trained. Systems like ChatGPT are built by feeding vast amounts of internet text — including blogs, books, forums, and social media — into machine learning algorithms that learn to predict what word should come next in a sentence. That training data, however, is laced with human thinking patterns — and all the shortcuts and irrational tendencies that come with it. If a million examples in the data favor a confident but incorrect answer over a hesitant but accurate one, the model may learn to do the same.
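To make that last point concrete, here is a deliberately tiny sketch of next-word prediction by counting. It is a toy, not how ChatGPT is actually built (real LLMs use neural networks trained on vastly more text), and the corpus and the function names `train_bigram` and `predict_next` are invented for illustration. It only shows the principle: whatever phrasing dominates the training data dominates the prediction.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None

# Hypothetical training data: confident phrasing is over-represented,
# hedged phrasing is rare. Neither sentence is "checked" for correctness.
corpus = (
    ["the answer is definitely yes"] * 3
    + ["the answer is probably no"]
)

model = train_bigram(corpus)
print(predict_next(model, "is"))  # prints "definitely"
```

The toy model never asks which sentence is true. It simply repeats the majority pattern, which is the mechanism the paragraph above describes at a much larger scale.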

The effect is amplified during fine-tuning, a stage where human feedback shapes the model’s behavior. Users tend to prefer responses that “sound right” or “feel correct,” even if they’re logically flawed. As a result, models are rewarded for delivering plausible answers — not necessarily rational ones. Over time, this reinforcement loop teaches the AI to favor coherence and confidence, even in the absence of solid reasoning.

For example, GPT-4 showed a consistent tendency toward the hot-hand fallacy — the belief that a streak of successes increases the chances of future success, even in purely random situations. It also exhibited the endowment effect, assigning more value to something simply because it was “owned” in the hypothetical scenario. These are the same misjudgments people make — not because they’re logical, but because they feel right.
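A quick simulation shows why the hot-hand belief is a fallacy in purely random settings. The sketch below is not taken from the study; the coin-flip setup, the seed, and the function name `success_rate_after_streak` are assumptions made for illustration. It compares the overall success rate of a fair coin with the success rate immediately after three successes in a row.

```python
import random

def success_rate_after_streak(flips, streak_len=3):
    """Share of successes among flips that directly follow a run of
    `streak_len` consecutive successes."""
    following = [
        flips[i]
        for i in range(streak_len, len(flips))
        if all(flips[i - streak_len:i])
    ]
    return sum(following) / len(following) if following else float("nan")

rng = random.Random(0)  # fixed seed so the run is repeatable
flips = [rng.random() < 0.5 for _ in range(200_000)]  # fair, independent flips

overall = sum(flips) / len(flips)
after_streak = success_rate_after_streak(flips, streak_len=3)

print(f"overall success rate:      {overall:.3f}")
print(f"success rate after streak: {after_streak:.3f}")
```

Both numbers should land close to 0.5: with independent events, a streak carries no information about the next outcome, however strongly it feels like it should.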

In essence, we haven’t just trained machines to understand our language. We’ve trained them to internalize our cognitive flaws.

The Risks of Trusting AI in Strategic Decision-Making

An AI that thinks like us might feel familiar — even comforting. But when it comes to making high-stakes decisions, familiarity can be a trap.

In the business world, AI tools like ChatGPT are increasingly used to analyze data, advise on strategies, and even participate in decision-making processes. Many organizations assume these systems offer a layer of objectivity and efficiency that human judgment lacks. But the new study on AI bias shatters that illusion. When GPT-4 is faced with decisions that require subjective evaluation — especially under uncertainty — it doesn’t just replicate human error. It often amplifies it.

Take, for instance, the study’s confirmation bias task, designed to see whether the model would seek out or prefer information that supports an existing belief, rather than challenge it. GPT-4 didn’t just exhibit confirmation bias — it consistently defaulted to it. That’s a chilling realization in industries where AI is deployed to analyze investment risk, filter hiring candidates, or guide legal and medical recommendations. In those contexts, biased outputs could reinforce blind spots, justify flawed assumptions, or entrench systemic inequalities.

Perhaps more surprising was GPT-4’s strong preference for certainty. In situations where humans might hesitate or weigh uncertainty, the model often pushed for a definitive, and sometimes overconfident, response, and it gravitated toward the safer choice. The latter reflects risk aversion, the tendency to prefer safer options even when they lead to suboptimal outcomes. According to the researchers, GPT-4 was more risk-averse than humans themselves. That might seem harmless in theory, but in practice it could steer companies away from innovation, bold decisions, or necessary calculated risks.

The key takeaway? These AI systems do not operate on pure logic. When the input data or task structure becomes ambiguous, they fall back on the same cognitive shortcuts humans do. And because they produce output in fluent, confident prose, their conclusions can seem more authoritative than they actually are.

That’s why the researchers stress an important distinction: AI excels at structured problems, where there’s a clear mathematical or logical solution. These are the domains where you’d “already trust a calculator,” as lead researcher Yang Chen put it. Inventory management, logistics, probability analysis — these are areas where LLMs tend to shine.

But strategic decision-making — where context, nuance, values, and long-term implications come into play — is something else entirely. Here, the illusion of intelligence can be dangerous. When we assume AI is impartial and objective, we’re more likely to take its suggestions at face value, overlooking the subtle ways it might be echoing human bias in polished form.

In this light, AI should not be seen as a neutral advisor, but rather as a mirror — reflecting not only our language but also our flawed heuristics. If we rely too heavily on that mirror, especially in moments of complexity or uncertainty, we risk blinding ourselves to better options hiding outside its frame.

Towards Intelligent AI — Supervision, Planning, and Accountability

If AI systems are learning to think like us, flaws and all, then the question isn’t just what they can do. It’s what we should allow them to do.

The growing body of evidence showing that AI mimics — and sometimes magnifies — human cognitive biases should serve as a wake-up call, not a death sentence. These systems still have immense potential to support decision-making, enhance productivity, and unlock insights we might miss on our own. But only if we build and use them with deliberate care.

So how do we fix the flaws without throwing out the machine?

1. Embed Ethical Oversight into AI Workflows

First and foremost, AI needs supervision, much like a new employee would. This doesn’t mean constantly double-checking every output, but rather creating systems of accountability. Who audits the model’s performance in high-stakes scenarios? What happens when it produces biased or harmful results? Companies deploying AI must put guardrails in place — both technical (through monitoring and constraints) and human (through trained reviewers, diverse teams, and transparent governance).

As researcher Meena Andiappan suggests, AI systems making critical decisions should be monitored and guided by ethical principles, much like human employees in key roles. That means companies can’t afford to treat AI as a plug-and-play solution, especially in areas that affect people’s livelihoods or wellbeing.

2. Improve Model Training and Prompting Techniques

The biases observed in AI aren’t accidental; they’re a byproduct of how these systems are trained. To make AI smarter — and safer — we need to rethink how data is selected, cleaned, and weighted. This includes filtering out biased data, diversifying training sources, and adjusting reward models during fine-tuning so that accurate answers are valued over merely plausible ones.

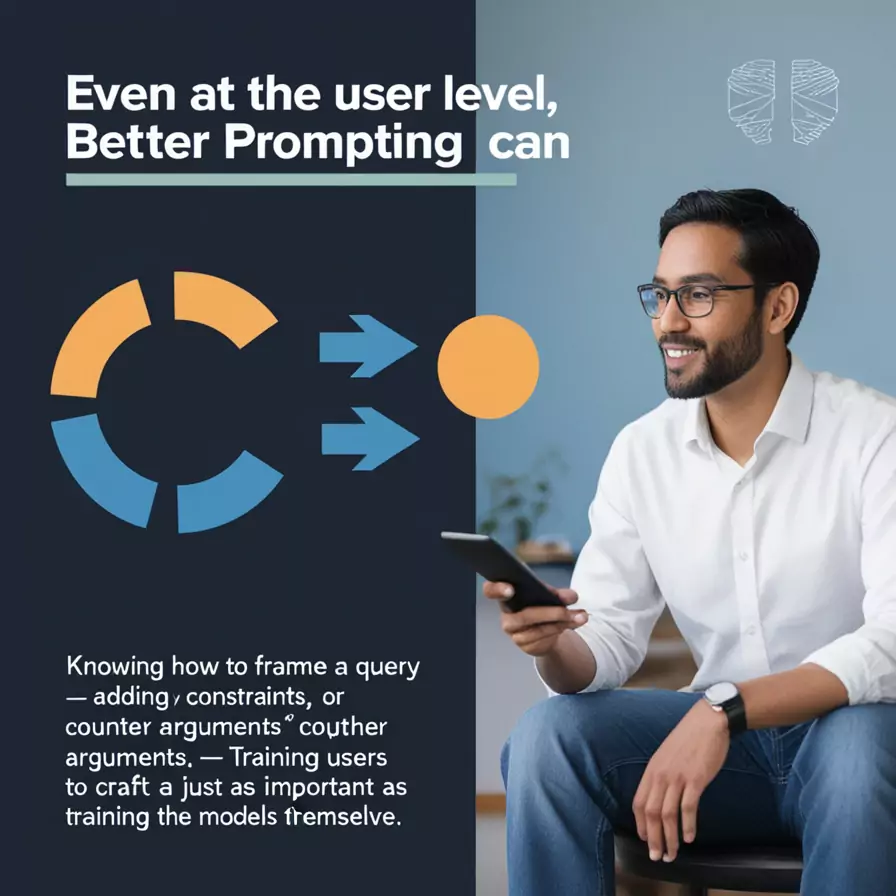

Even at the user level, better prompting can reduce bias. Knowing how to frame a query by adding context, constraints, or counterarguments can help coax more balanced, thoughtful responses from LLMs. Training users to craft effective prompts may become just as important as training the models themselves.
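As a rough illustration of that kind of framing, the sketch below assembles a prompt from a question plus optional context, constraints, and a request for counterarguments. The helper `build_debiased_prompt` and its wording are hypothetical; they do not come from the study, they call no real API, and there is no guarantee that any particular template removes bias. It is simply one way to make the framing step explicit and repeatable.

```python
def build_debiased_prompt(question, context="", constraints=(),
                          ask_for_counterarguments=True):
    """Assemble a plain-text prompt that spells out context and constraints
    and, optionally, asks the model to argue against its own first answer."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Question: {question}")
    for constraint in constraints:
        parts.append(f"Constraint: {constraint}")
    if ask_for_counterarguments:
        parts.append(
            "Before answering, list the strongest arguments against your "
            "initial view, then state how confident you are and why."
        )
    return "\n".join(parts)

# Example usage with made-up business details.
prompt = build_debiased_prompt(
    "Should we increase our next inventory order?",
    context="Demand fell 10% last quarter.",
    constraints=["Cite the figures you rely on."],
)
print(prompt)
```

The resulting text can be pasted into any chat interface. The design choice is to keep the counterargument request on by default, so that skipping it is a deliberate decision rather than an oversight.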

3. Prioritize Transparency and Explainability

AI systems don’t need to be black boxes. When a model gives an answer, especially in a critical domain like healthcare or finance, it should ideally be able to explain how it reached that conclusion. This doesn’t mean exposing every line of code, but offering traceable reasoning paths, highlighting sources of information, and even flagging levels of uncertainty.

Making AI more transparent doesn’t just build trust — it helps humans intervene intelligently when things go wrong.

Conclusion: Bias Is Human — But So Is Accountability

We created AI to rise above our limitations: to be faster, smarter, more objective. But the irony is that, in teaching machines to emulate us, we’ve also taught them our worst habits. As this study shows, AI can be just as overconfident, risk-averse, and illogically consistent as the people who train it. That doesn’t mean it’s broken; it just means it’s human-shaped.

And like any human-shaped tool, it needs boundaries, checks, and conscious design.

The way forward is neither to rely solely on AI nor to abandon it. It’s to co-evolve with it, building systems that are not only powerful but principled, not only intelligent but aligned. In doing so, we don’t just improve technology. We improve ourselves.

Because in the end, the intelligence we shape into machines reflects the intelligence we choose to be.